Coming together for ethical AI: Key takeaways from Data Natives 2024

Data Natives, Berlin. Photo: Lotta Holmström

At the Data Natives symposium in Berlin, held on 22–23 October 2024, a select group of AI experts, policymakers, creatives and innovators explored the complex intersection of artificial intelligence, ethics, and society. It was quite an unusual conference – about half the time was spent in intense discussions in smaller groups, delving deeply into critical questions. The goal was to transform our collective insights into a shared document to shape ongoing discourse, possibly sometime this spring.

The break-out session I attended, titled Hallucinations, Mis-/Disinformation, was facilitated by British tech journalist, entrepreneur and advisor Monty Munford along with tech ethicist and philosopher Alice Thwaite. I loved how the diversity of backgrounds and experiences in the group contributed to a lively discussion and exchange of perspectives.

Here are some of the key takeaways from an inspiring two-day deep dive into AI’s ethical landscape.

“2050 – Imagining the Good AI”

While the idea of having the year 2050 in focus in our discussions was a bit ludicrous – how do we even predict where AI will stand a year or two from now? – it served as a pointer to the conference’s ethical theme: How to make sure the AI applications we develop and use today are in line with the future we want to see?

One of the core questions was how ethical considerations can be embedded into AI development. Several speakers emphasized the need to foster a culture of accountability and transparency in AI.

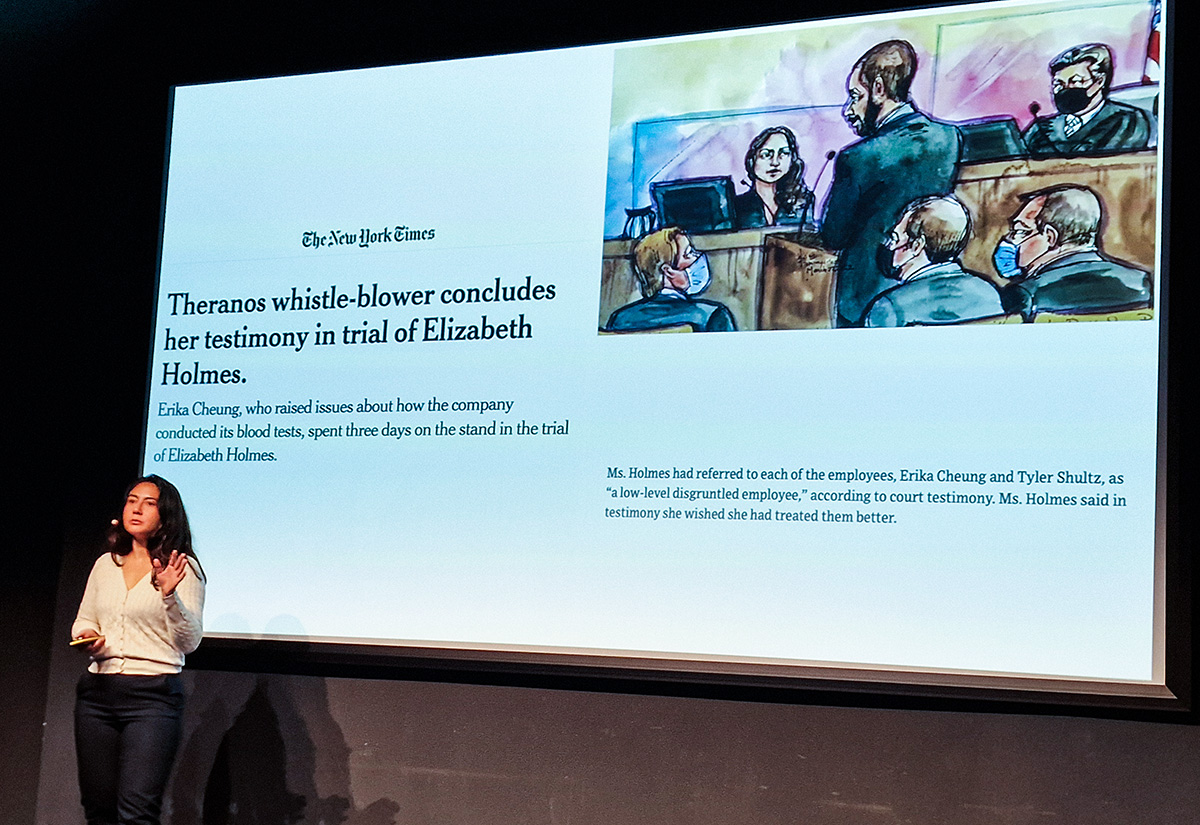

This need was underscored with cautionary tales like that of Theranos, illustrating how unchecked ambition can erode public trust, potentially causing long-lasting harm across entire industries.

Erika Cheung, a former Theranos employee and a key whistleblower in the company’s downfall, provided a stark reminder of what can go wrong when ethics are sidelined in the pursuit of innovation. Cheung’s personal experience, including facing harassment and legal battles for speaking out, highlighted the heavy price that individuals and society pay for unethical practices. Her story served as a powerful call for collective action to prevent similar situations from arising in the AI field.

Erika Cheung said testifying in the federal trial against Theranos was one of the lowest points in her life. “It was incredibly difficult.” Photo: Lotta Holmström

Reflecting on such examples, speakers highlighted the broader social responsibility AI carries—not just to solve problems but to avoid introducing new risks. Transparency, accountability, and a culture that places ethics alongside innovation were proposed as foundational pillars for responsible AI.

Regulating a Moving Target: The EU AI Act and Beyond

The new European AI Act, and its effect on innovation, sparked one of the most intense discussions at Data Natives. Some participants saw this legislation as a means of positioning the EU as a global leader in ethical AI, setting a high bar for responsible development and use. Others raised concerns that strict regulations might stifle innovation and weaken Europe’s global competitiveness.

– Europe is not in any way a leader of technology. We counteract that by regulation. Whether that works out in the long run, I have my doubts, said attorney Dr. Alexander Jüngling in one of the panel discussions.

The regulations panel at Data Natives. Photo: Lotta Holmström

Louis M. Morgner, co-founder at startup Jamie, became the spokesperson for many of the entrepreneurs at the symposium when he voiced their concerns. Because all the major foundational language models are developed outside the EU, new features either are not available to EU companies and users at all or arrive several months late as a consequence of the AI Act.

– If we are always lagging behind, our capability is much smaller. We are seriously hurting ourselves in this way. In the end it is about speed in innovation.

Morgner said that his company might even move to the US as a consequence of the EU regulations.

Pro-regulation participants said the AI Act could foster trust in AI, leading to greater adoption and ultimately benefiting both businesses and society.

– Regulation is not meant to do innovation. Even if we think in terms of innovation, one of the main things within AI is the lack of trust. The AI Act mandates those who build AI to build AI that is safer. Then you can increase the trust, said Dan Nechita, who co-led the technical negotiations on the AI Act in the EU Parliament.

An interesting question was whether Europe can, or should, act alone in this matter or if international cooperation is necessary to build a sustainable AI future.

Attendees agreed that the AI Act must be adaptable to keep pace with AI’s rapid evolution, noting that LLMs were only added to it at the last minute.

– We’re trying to regulate a fast-moving train, said Dr. Alexandra Diening, a neuroscientist, author and founder of the Human-AI Symbiosis Alliance.

Data quality and explainability

The importance of data quality cannot be emphasized enough, especially in applications where biased or faulty data can have devastating consequences, like healthcare or law enforcement.

In a world where vast amounts of data are generated every second, it’s easy to assume that “more is better.” However, speakers highlighted the limitations of relying solely on vast datasets, arguing that smaller, well-curated datasets can often be more effective for training AI models, especially for specialized applications.

Participants stressed the importance of understanding how AI models arrive at their decisions, ensuring that these decisions are fair, unbiased, and accountable. But how do you explain the inner workings of complex systems to the general public in a way that makes them easy to understand and builds trust? Even people working with AI, like researchers and developers, need tools and techniques to help them interpret AI models and identify potential biases or errors.

The consensus was that if the field prioritizes quality and accountability, these systems can make more equitable decisions, contributing positively to society.

Combatting disinformation and deepfakes

In our break-out session discussions, Monty Munford asked me if I’d fall for fake news, and the answer is, without a doubt, yes. It would be foolish to believe that as a journalist I am immune to being fooled. Sure, I might have better tools to see through such campaigns than the average person, but there are ways to trick anyone.

In journalism and content creation alike, AI’s great potential to inform as well as deceive places an enormous responsibility on developers and regulators to prioritize truthful outputs, and on journalists not to repeat claims that are simply untrue.

With generative AI’s growing ability to create realistic but false content, questions were raised about AI’s accountability in the spread of misinformation. The onslaught of fake news and disinformation campaigns threatens to erode public trust in journalism.

Maxim Nitsche, Deep. Photo: Lotta Holmström

Maxim Nitsche, founder and CEO at the startup Deep, presented an interesting effort to combat such threats.

– All these tools do everything in their power to combat the fourth estate. That’s honestly terrifying. Now, can we fix it? I don’t believe we’re doomed, he said.

The company is building a system for fact-checking news and social media in real time. Nitsche talked about the necessary steps to make this happen. The first is filtering out the information that needs checking, as you cannot check everything. The second is the actual fact-checking, and there a human needs to be in the loop, Nitsche said. The third is flagging all copies of the checked information, so that the spreaders of disinformation can’t just make new copies that evade the check. Maxim Nitsche’s claim is that they have solved steps 1 and 3.
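To make the three steps a bit more concrete, here is a minimal Python sketch of how such a pipeline could be wired together. It is purely my own illustration and not how Deep's system works: the function names, the keyword heuristic for step 1 and the word-overlap measure and threshold for step 3 are all assumptions. Step 2 stays with a human, so the code only builds the review queue and takes the list of already debunked claims as input.

```python
import re
from dataclasses import dataclass, field

# Everything here is a hypothetical illustration, not Deep's actual method.


def looks_checkworthy(text: str) -> bool:
    """Step 1 (toy heuristic): keep posts with a number or a reporting verb."""
    pattern = r"\d|\b(said|claims?|according to|reports?)\b"
    return bool(re.search(pattern, text.lower()))


def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Split a text into overlapping word sequences of length `size`."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < size:
        return {tuple(words)}
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def similarity(a: str, b: str) -> float:
    """Jaccard similarity between the word shingles of two texts."""
    sa, sb = shingles(a), shingles(b)
    return len(sa & sb) / len(sa | sb)


@dataclass
class Triage:
    needs_review: list[str] = field(default_factory=list)    # queue for step 2
    flagged_copies: list[str] = field(default_factory=list)  # result of step 3


def triage(posts: list[str], debunked: list[str], threshold: float = 0.5) -> Triage:
    """Sort posts into near-copies of debunked claims and a human review queue."""
    result = Triage()
    for post in posts:
        # Step 3: flag near-copies of claims a human has already debunked.
        if any(similarity(post, claim) >= threshold for claim in debunked):
            result.flagged_copies.append(post)
        # Step 1: everything else is filtered; only check-worthy posts go on.
        elif looks_checkworthy(post):
            result.needs_review.append(post)
    return result


debunked = ["The city council banned all bicycles in 2024"]
posts = [
    "BREAKING: the city council banned all bicycles in 2024!!",
    "What a lovely sunset tonight",
    "The mayor said taxes will drop by 10 percent",
]
result = triage(posts, debunked)
print(result.flagged_copies)
print(result.needs_review)
```

A real system would need far more robust filtering and copy detection than this, but the sketch shows why the human verdict in step 2 is the piece that the other two steps are built around.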

The company is now in talks with publishers and social media platforms – “all of them”, he said. Very interesting indeed.

AI to augment human creativity

AI’s role in creative industries spurred dialogue around the evolving relationship between humans and technology. While some view AI as a threat to traditional creative roles, others argued that AI can be a powerful tool to augment human creativity rather than replace it.

Bernie Su, a writer, director, and producer known for his innovative use of technology in storytelling, offered a nuanced perspective on AI’s role in creative processes. He argued that AI could be a valuable tool for artists but stressed that human intentionality and expertise remain essential. He also acknowledged the anxieties that many artists feel about AI, drawing parallels with historical fears surrounding new technologies.

– Yes, it’s disrupting jobs. But that’s in the history of everything, like coal mining… Creativity is going through this process. You can hate the system. But if you can’t tell if it’s AI, it doesn’t matter, he said.

Independent researcher and transdisciplinary artist Portrait XO spoke in similar terms.

– There is a historical trend for us humans to fear how new technologies might destroy how we do things, she said, pointing to synthesizers vs pianos, electric guitars vs acoustic ones and pencils vs pens.

She has transformed datasets like a century of world temperature anomalies into music with the help of smart AI tools, addressing the UN’s 17 Sustainable Development Goals.

– A lot of exciting things happen when you use your own small data, and smaller language models as well, she said, pointing to an urgency for art, science and tech to come together around these big issues.

Many argued for the importance of preserving the human element in creativity while harnessing AI’s potential. One of them was Boris Eldagsen, a photographer known for his work with AI-generated images. He emphasized that AI lacks intentionality and requires human prompting and evaluation. Eldagsen views AI as a collaborative partner, a “choir” that he, as the conductor, guides to achieve his artistic vision. His perspective highlighted the potential for AI to augment human creativity, allowing artists to explore new possibilities and push the boundaries of their craft.

Tangible solutions to real-world problems

While the excitement surrounding AI was palpable at Data Natives, there was also a clear call for moving beyond the hype and focusing on tangible applications and real-world impact. Several times I heard people express a longing for the hype to end, the bubble to burst, and other such terms.

Speakers emphasized the need for AI solutions that address specific problems, create value, and benefit society as a whole. This sentiment resonated with the growing awareness of the potential downsides of AI, such as bias, job displacement, and the environmental impact of large-scale AI models.

A field where AI has the potential to be very beneficial is healthcare. Dr. Anita Puppe, an expert on AI in healthcare at IBM iX, spoke about how AI is transforming healthcare by assisting with diagnostics, treatment planning and patient monitoring.

Future applications may include predictive analysis, automated workflows and personalized medicine. These raise a ton of ethical challenges, but if handled responsibly they could also lead to great gains. The roadmap Dr. Puppe described includes incorporating ethical principles in the development process, having the system provide clear explanations of how it makes its decisions, and closely monitoring AI systems for potential biases or unintended consequences.

She was also one of the few speakers who actually dared to envision the world in 2050:

Dr. Anita Puppe’s presentation on AI innovation in healthcare. Photo: Lotta Holmström

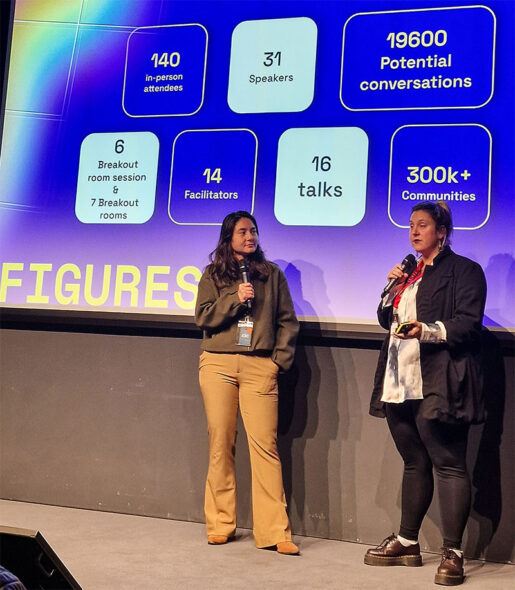

Data Natives in figures, delivered by organizers Erika Cheung and Elena Poughia. Photo: Lotta Holmström

Coming together

Perhaps the most central message from Data Natives was the importance of cross-disciplinary discussions and collaboration between fields – from developers and legal experts to journalists and creatives. Speakers emphasized the need for diverse perspectives, open dialogue, and shared responsibility in shaping the future of ethical and responsible AI. By coming together and exchanging perspectives, we can better understand the challenges and opportunities that AI brings.

In an environment that encourages such dialogues, the AI community can move beyond innovation for its own sake to build solutions that genuinely benefit humanity. As AI’s societal role continues to expand, Data Natives offered a timely reminder that ethical development must be a collective effort, grounded in respect, transparency, and a shared commitment to the public good.

Rounding off two intense days, organizers Elena Poughia and Erika Cheung said that this is only the beginning, outlining the process leading to a bigger event in the spring. I would love to take part in that as well.

What is your take on ethics in AI, and where are we headed with the rapid development of generative AI? What is the potential and what risks are involved? I would love to hear your thoughts in the comments.

More photos from the event can be found on my Flickr account.
